Largely the province of military and government powers throughout history, cryptography has evolved significantly over time. From its secretive beginnings as a way to keep sensitive written messages safe from enemies, cryptography has expanded to serve many purposes, including protecting the confidentiality and integrity of information. This method of hidden (from the Greek kryptós) writing (from the Greek gráphō) has moved from clay tablets to parchment and paper, to the telegraph line, and eventually to the electronic code that underpins communication and online transactions in our modern lives. For a glimpse into how cryptography has advanced through the ages, read on:

A Mesopotamian Trade Secret

One of the earliest examples of cryptography comes from Mesopotamia, circa 1500 B.C. A clay tablet found near the banks of the Tigris bore a simply encoded bit of text, which turned out to be a recipe for a pottery glaze. The writer used a basic substitution cipher to hide the recipe, which was presumably valuable at the time. This early attempt by a craftsman wary of industrial espionage shows how long we’ve been trying to keep our writing away from prying eyes.

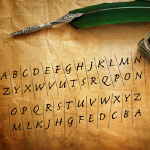

Ancient Opposites

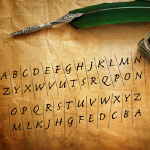

The Atbash cipher was invented by the Hebrews as a quick way to encode the written word. It is a simple substitution cipher that reverses the alphabet: the first letter is swapped with the last, the second with the second-to-last, and so on. It works with any language whose alphabet has a set order, which makes it easy to use but also easy to crack. In Hebrew texts, Atbash was used to create parodies of names and places, to quickly conceal mildly sensitive information, or to avoid writing a politically or religiously significant word.
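
To make the reversal concrete, here is a minimal Python sketch of Atbash applied to the 26-letter Latin alphabet rather than the Hebrew one. The function name `atbash` is chosen for illustration only.

```python
import string

def atbash(text: str) -> str:
    """Swap each Latin letter with its mirror in the alphabet (A<->Z, B<->Y, ...).

    Case is preserved and non-letters pass through unchanged.
    """
    upper = string.ascii_uppercase
    lower = string.ascii_lowercase
    # Map the alphabet onto itself read backwards.
    table = str.maketrans(upper + lower, upper[::-1] + lower[::-1])
    return text.translate(table)

print(atbash("Attack at dawn"))           # Zggzxp zg wzdm
print(atbash(atbash("Attack at dawn")))   # Attack at dawn
```

Because the mapping is its own inverse, the same function both encrypts and decrypts, and since there is no key at all, anyone who knows the trick can read the message.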

Warrior Writing

The Spartans used a two-piece method of cryptography for military messages called the scytale: a long strip of parchment wound around a rod of a particular width. The message was written along the wrapped strip, so that once the strip was unwound, the letters appeared out of order. Only someone with a rod of the same width could rewind the parchment so the letters lined up as intended, making the rod a sort of shared key. While effective, this method was vulnerable to theft, and its very form hinted at how an enemy could crack it: by trying rods of different widths. Because of these weaknesses, the scytale may have been used to authenticate a recipient rather than to conceal truly sensitive material.
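
In modern terms the scytale is a transposition cipher: the letters are not replaced, only reordered. The Python sketch below models it under simple assumptions: the rod's width is represented by how many letters fit around it (`circumference`), short messages are padded with `X`, and the function names are hypothetical.

```python
import math

def scytale_encrypt(plaintext: str, circumference: int, pad: str = "X") -> str:
    """Write the message in rows along the rod, then unwind the strip.

    `circumference` stands in for the rod's width: it is how many letters fit
    around the rod, so the message occupies that many rows. Unwinding the strip
    reads the grid column by column.
    """
    cols = math.ceil(len(plaintext) / circumference)
    padded = plaintext.ljust(circumference * cols, pad)  # complete the last row
    rows = [padded[r * cols:(r + 1) * cols] for r in range(circumference)]
    return "".join(rows[r][c] for c in range(cols) for r in range(circumference))

def scytale_decrypt(ciphertext: str, circumference: int) -> str:
    """Rewind the strip on a rod of the same width and read along the rows."""
    cols = len(ciphertext) // circumference
    return "".join(
        ciphertext[c * circumference + r]
        for r in range(circumference)
        for c in range(cols)
    )

secret = scytale_encrypt("HELPMEIAMUNDERATTACK", circumference=4)
print(secret)                      # HENTEIDTLAEAPMRCMUAK
print(scytale_decrypt(secret, 4))  # HELPMEIAMUNDERATTACK
print(scytale_decrypt(secret, 5))  # wrong rod width: the letters stay scrambled
```

An attacker only has to try a handful of widths, which is exactly the weakness the paragraph describes.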

The Emperor's Code

The Caesar cipher, a simple substitution cipher, is named after Julius Caesar, who famously used it in his personal correspondence and to transmit sensitive military messages. It encodes writing by “shifting” each letter a fixed number of places along the alphabet. This may seem weak to modern eyes, but because most people were illiterate, even simple encryption offered far more protection in Caesar’s time. The method remained in wide use for centuries and became the foundation for stronger methods of encryption.
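
A short Python sketch of the shift, assuming the 26-letter Latin alphabet and a shift of three, the value traditionally associated with Caesar. `caesar` is a hypothetical helper name, not a standard library function.

```python
import string

ALPHABET = string.ascii_uppercase

def caesar(text: str, shift: int) -> str:
    """Move each letter `shift` places along the alphabet, wrapping Z back to A.

    A negative shift decrypts. Text is uppercased; non-letters are kept as-is.
    """
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            result.append(ch)
    return "".join(result)

secret = caesar("VENI VIDI VICI", 3)
print(secret)              # YHQL YLGL YLFL
print(caesar(secret, -3))  # VENI VIDI VICI
```

With only 25 useful shifts, an attacker can simply try them all, which is why the cipher's strength depended on its readers' illiteracy rather than on its key.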

A Golden Age Discovery

Islamic scholar and polymath Al-Kindi devised a way to break classical (monoalphabetic) substitution ciphers, like the Caesar cipher, in his 9th-century treatise, On Decrypting Encrypted Correspondence. A monoalphabetic substitution cipher uses a single substitution alphabet for an entire text, so each plaintext letter is always replaced by the same ciphertext letter. Al-Kindi’s technique, now known as frequency analysis, is a statistical method that relies on the fact that certain letters and letter combinations are more common than others. By counting how often each letter appears in the encrypted text and matching the most frequent ones to the most common letters of the language, the reader can reconstruct the substitution alphabet and decode the message, turning the regularities of language itself against the cipher.
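
As an illustration of frequency analysis, the sketch below breaks a Caesar-shifted message by trying all 26 shifts and keeping the one whose letter counts best match typical English, scored with a chi-squared statistic. This is a simplified modern take (Al-Kindi worked by hand, with Arabic text): the frequency table holds approximate, commonly cited English values, and the helper names are hypothetical. A general substitution alphabet cannot be brute-forced this way, but the same letter counts guide the search.

```python
import string

ALPHABET = string.ascii_uppercase

# Approximate relative frequencies (%) of the letters A..Z in English text.
# These are commonly cited textbook values, not exact constants.
ENGLISH_FREQ = [
    8.2, 1.5, 2.8, 4.3, 12.7, 2.2, 2.0, 6.1, 7.0, 0.15, 0.77, 4.0, 2.4,
    6.7, 7.5, 1.9, 0.095, 6.0, 6.3, 9.1, 2.8, 0.98, 2.4, 0.15, 2.0, 0.074,
]

def shift_letters(text: str, shift: int) -> str:
    """Caesar-shift the letters of `text` (uppercased); other characters pass through."""
    return "".join(
        ALPHABET[(ALPHABET.index(c) + shift) % 26] if c in ALPHABET else c
        for c in text.upper()
    )

def chi_squared(text: str) -> float:
    """Measure how far the letter counts of `text` are from English (lower is closer)."""
    letters = [c for c in text if c in ALPHABET]
    if not letters:
        return float("inf")
    score = 0.0
    for letter, percent in zip(ALPHABET, ENGLISH_FREQ):
        expected = len(letters) * percent / 100
        score += (letters.count(letter) - expected) ** 2 / expected
    return score

def crack_caesar(ciphertext: str) -> tuple[int, str]:
    """Try every shift and keep the one whose output looks most like English."""
    best = min(range(26), key=lambda s: chi_squared(shift_letters(ciphertext, -s)))
    return best, shift_letters(ciphertext, -best)

plaintext = ("SOME LETTERS SUCH AS E AND T APPEAR FAR MORE OFTEN "
             "THAN OTHERS IN ORDINARY ENGLISH WRITING")
ciphertext = shift_letters(plaintext, 7)
print(crack_caesar(ciphertext))
```

Very short messages carry too few letters for the counts to be reliable, which is why frequency analysis works best on longer texts.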

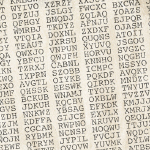

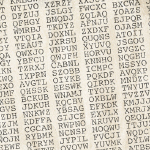

The More the Merrier

While Al-Kindi was the first to publish a way to crack monoalphabetic ciphers, others followed in Europe, which meant that a new way to encode text was needed. Enter the polyalphabetic cipher, which uses multiple substitution alphabets to encrypt a single text. Polyalphabetic encryption was first described by Leon Battista Alberti in 1467; he used a metal disk to switch between alphabets. In 1518, the Benedictine monk Trithemius introduced the “tabula recta,” a square table of alphabets in which each row repeats the alphabet shifted one letter to the left of the row above. With this table, a user could readily build a polyalphabetic cipher. Giovan Battista Bellaso built on this idea in 1553, describing a cipher in which a repeating keyword selects the alphabet for each letter. Blaise de Vigenère described a similar system decades later, along with an autokey cipher (one that uses part of the message itself as the key), and the keyword cipher ended up being attributed to him rather than to Bellaso, its rightful creator.
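
The sketch below shows how a tabula recta drives a keyword-based polyalphabetic cipher, the scheme usually called the Vigenère cipher today. It is a minimal illustration under simple assumptions (uppercase Latin letters only, non-letters dropped, a keyword made of letters); `vigenere` and `TABULA_RECTA` are illustrative names, and the autokey variant is not shown.

```python
import string

ALPHABET = string.ascii_uppercase

# Tabula recta: row i is the alphabet shifted i places to the left.
TABULA_RECTA = [ALPHABET[i:] + ALPHABET[:i] for i in range(26)]

def vigenere(text: str, keyword: str, decrypt: bool = False) -> str:
    """Encrypt (or decrypt) the letters of `text` with a repeating `keyword`.

    Each key letter picks a row of the tabula recta; the plaintext letter picks
    the column. Non-letters are dropped for simplicity.
    """
    letters = [c for c in text.upper() if c in ALPHABET]
    key = keyword.upper()
    out = []
    for i, ch in enumerate(letters):
        row = TABULA_RECTA[ALPHABET.index(key[i % len(key)])]
        if decrypt:
            out.append(ALPHABET[row.index(ch)])  # find the column holding ch
        else:
            out.append(row[ALPHABET.index(ch)])  # read the row at ch's column
    return "".join(out)

print(vigenere("ATTACK AT DAWN", "LEMON"))                # LXFOPVEFRNHR
print(vigenere("LXFOPVEFRNHR", "LEMON", decrypt=True))    # ATTACKATDAWN
```

The three A's in the message encrypt to L, O, and E, so a single letter no longer maps to a single ciphertext letter, which is what blunts simple frequency counts.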

Grand Deceptions

The French were notoriously adept users of cryptography for governmental and military purposes, and the “Sun King” Louis XIV lived up to that reputation. He employed several royal cryptographers, including members of the famous Rossignol family. Father and son Antoine and Bonaventure Rossignol devised the “Grand Cipher” or “Great Cipher” in the mid-17th century for use by the King (and, by extension, the French government). Its name reflected the belief that it was unbreakable: rather than substituting single letters, the code used numbers to represent syllables, and it resisted attack for some two centuries until it was finally cracked in the 1890s. By then, its sheer complexity had long since led the French to abandon it.

A Founding Father's Innovation

One of America’s founding fathers can be credited with inventing a homegrown encryption method that, with some modifications, was still used by the U.S. Navy well into the 20th century. Thomas Jefferson invented his wheel cipher in 1795: a simple device made of a set of wheels, each imprinted with the alphabet in a scrambled order and threaded onto an axle in an order agreed on in advance. To encrypt, the sender turned the wheels so the message read across one row, then copied down the letters from any other row. The recipient, with the wheels stacked in the same order, spelled out those letters and then looked around the cylinder for the one row that read as a message. Anyone who didn’t know the order of the wheels would only ever see gibberish.
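
Here is a simplified Python model of the wheel cipher. It assumes wheels generated from a shared random seed, a message of uppercase letters no longer than the number of wheels, and an arbitrary wheel count; `make_wheels`, `read_row`, and `all_rows` are hypothetical names. The secret is the `order` list, standing in for the order of the wheels on the axle.

```python
import random
import string

ALPHABET = string.ascii_uppercase

def make_wheels(count: int, seed: int) -> list[str]:
    """Create `count` hypothetical wheels, each carrying the alphabet in a scrambled order."""
    rng = random.Random(seed)  # seeded so both parties can build identical wheels
    wheels = []
    for _ in range(count):
        letters = list(ALPHABET)
        rng.shuffle(letters)
        wheels.append("".join(letters))
    return wheels

def read_row(text: str, wheels: list[str], order: list[int], offset: int) -> str:
    """Spell `text` along one row of the stacked wheels, then read the row `offset` steps around."""
    if len(text) > len(order):
        raise ValueError("message is longer than the number of wheels on the axle")
    out = []
    for ch, wheel_id in zip(text, order):
        wheel = wheels[wheel_id]
        out.append(wheel[(wheel.index(ch) + offset) % 26])
    return "".join(out)

def all_rows(ciphertext: str, wheels: list[str], order: list[int]) -> list[str]:
    """What the recipient sees: every row around the cylinder after spelling the ciphertext."""
    return [read_row(ciphertext, wheels, order, k) for k in range(26)]

wheels = make_wheels(10, seed=1795)
order = [3, 7, 0, 9, 1, 5, 2, 8, 6, 4]  # the shared secret: wheel order on the axle
ciphertext = read_row("MEETATNOON", wheels, order, offset=5)
print("MEETATNOON" in all_rows(ciphertext, wheels, order))  # True
```

The recipient does not need to know which row the sender copied; only one of the 26 rows is likely to read as language.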

The Once and Future Unbreakable Cipher

Described first by Frank Miller in 1882 for securing telegraph messages and reinvented in 1917 by AT&T engineer Gilbert Vernam, the one-time pad (OTP), not to be confused with the one-time password, works by using a secret, pre-shared key to encrypt a message. If the key is truly random, at least as long as the message, never reused, and kept completely secret, a one-time pad is unbreakable. Each character of the text is encrypted by combining it with the corresponding character of the key using modular addition. Vernam’s version used punched tape, but later applications often used paper notepads, since the top sheet could be easily torn off and destroyed after use. This method of encryption became part of the playbook for 20th-century spycraft and sensitive diplomatic communication.
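
A minimal sketch of a letter-based one-time pad using modular addition (mod 26), assuming messages of uppercase letters only; the helper names are illustrative. Vernam’s teleprinter version worked on bits instead, where the same idea becomes addition modulo 2, i.e. XOR. Python’s `secrets` module supplies cryptographically strong random numbers here, which is a practical stand-in for, not the same thing as, the truly random key the unbreakability argument assumes.

```python
import secrets
import string

ALPHABET = string.ascii_uppercase

def generate_pad(length: int) -> list[int]:
    """Draw one random shift (0-25) per message letter."""
    return [secrets.randbelow(26) for _ in range(length)]

def otp_encrypt(plaintext: str, pad: list[int]) -> str:
    """Add each key value to the matching letter, modulo 26."""
    if len(pad) < len(plaintext):
        raise ValueError("the pad must be at least as long as the message")
    return "".join(ALPHABET[(ALPHABET.index(c) + k) % 26] for c, k in zip(plaintext, pad))

def otp_decrypt(ciphertext: str, pad: list[int]) -> str:
    """Subtract the same key values, modulo 26, to recover the plaintext."""
    return "".join(ALPHABET[(ALPHABET.index(c) - k) % 26] for c, k in zip(ciphertext, pad))

message = "RETREATATDAWN"
pad = generate_pad(len(message))  # share in advance, use once, then destroy
ciphertext = otp_encrypt(message, pad)
assert otp_decrypt(ciphertext, pad) == message
```

Because every possible plaintext of the same length corresponds to some key, the ciphertext alone reveals nothing but the message length. Reusing a pad breaks this guarantee, since combining two ciphertexts made with the same key cancels the key out.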

Machine Ciphers

In 1917, Edward Hebern came up with a novel idea: a machine that would encode messages automatically by scrambling the alphabet as the operator typed. Hebern’s machine combined a typewriter with an electrical rotor whose internal wiring substituted one letter for another. To decipher a message, users simply inserted the rotor backwards and typed in the scrambled text, which produced the original message. The original Hebern rotor machine had some unfortunate flaws and was relatively weak, but later improved versions addressed them. Meanwhile, other inventors were developing similar ideas and building on one another’s work, leading to a proliferation of cipher machines that would see extensive use in wartime.
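
To show how a rotor turns a fixed substitution into a changing one, here is a toy single-rotor model in Python. It assumes the rotor advances one position per key press, as rotor machines generally did, and models inserting the rotor backwards as using the inverse wiring. The wiring string and function names are hypothetical, and Hebern’s actual mechanism differed in its details.

```python
import string

ALPHABET = string.ascii_uppercase

# Hypothetical rotor wiring: input contact i is wired to output letter WIRING[i].
WIRING = "EKMFLGDQVZNTOWYHXUSPAIBRCJ"

def reversed_rotor(wiring: str) -> str:
    """The same wiring traced from the other side (the rotor inserted backwards)."""
    inverse = [""] * 26
    for i, out_letter in enumerate(wiring):
        inverse[ALPHABET.index(out_letter)] = ALPHABET[i]
    return "".join(inverse)

def rotor_machine(text: str, wiring: str, start: int = 0) -> str:
    """Send each letter through one rotor that advances one step per key press."""
    out = []
    for n, ch in enumerate(text):
        pos = (start + n) % 26                     # how far the rotor has turned
        contact = (ALPHABET.index(ch) + pos) % 26  # rotor contact under the key
        result = (ALPHABET.index(wiring[contact]) - pos) % 26
        out.append(ALPHABET[result])
    return "".join(out)

ciphertext = rotor_machine("AAAAAA", WIRING)
print(ciphertext)                                         # EJKCHB
print(rotor_machine(ciphertext, reversed_rotor(WIRING)))  # AAAAAA
```

Typing the same letter six times yields six different letters, so a single rotor already behaves like a polyalphabetic cipher whose alphabet changes with every key press. Stacking several rotors that step at different rates, as later machines did, makes the cycle far longer.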

Enigmatic Enemies

The German Enigma machine was a rotor machine used in World War II, similar in concept to Hebern’s original idea, with settings that operators changed daily according to monthly codebooks. A combination of more advanced encryption technology and rigid procedure allowed the Germans to encrypt their wartime communications automatically with a seemingly unbreakable cipher. Luckily, Enigma was not unbreakable. Thanks to savvy cryptanalysis (code-breaking) by Polish mathematicians, aided by documents that French intelligence obtained from a German spy, Enigma was broken. Later, the British built on the Polish work to devise a machine, the Bombe, that automated the search for Enigma settings. Some speculate that had the German code not been broken, the war might have gone on much longer.

Going Public—Decades Later

Much of what we do online is made possible by asymmetric (public key) cryptography, which grew out of the idea of “non-secret encryption” first theorized by James Ellis in 1970. The British Government kept Ellis’s concept a secret but continued to work on improving it. In 1973, Clifford Cocks developed a workable algorithm for realizing Ellis’s concept, based on the difficulty of factoring the product of two large primes; the British were unable to put it to use at the time but kept it classified. An equivalent algorithm was independently invented and publicly described in 1977 by Rivest, Shamir, and Adleman, whose initials give the RSA algorithm its name. It’s unclear how history might have changed had Cocks’s invention been made public before RSA’s. His work remained classified until 1997, and he has since been honored for his contributions to mathematics and cryptography. Today, public key infrastructure (PKI) continues to allow us to secure and authenticate user activity.
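
The arithmetic behind the algorithm Cocks found and Rivest, Shamir, and Adleman published can be sketched with the classic toy numbers below. This is textbook RSA with tiny primes, shown only to illustrate the idea: real keys use primes hundreds of digits long, and real systems add padding, which this sketch omits.

```python
from math import gcd

# Toy key generation with tiny primes.
p, q = 61, 53
n = p * q                # public modulus: 3233
phi = (p - 1) * (q - 1)  # 3120; computing this requires knowing p and q
e = 17                   # public exponent; must share no factor with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)      # private exponent: 2753 (modular inverse, Python 3.8+)

message = 65
ciphertext = pow(message, e, n)    # anyone can encrypt with the public key (n, e)
recovered = pow(ciphertext, d, n)  # only the holder of d can decrypt
print(ciphertext, recovered)       # 2790 65
```

Finding `d` requires `phi`, and computing `phi` requires the factors `p` and `q`. For a modulus thousands of bits long, factoring is believed to be infeasible with classical computers, which is what lets `(n, e)` be published safely.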

War on Private Crypto

Because cryptography (and the encryption technology that grew out of it) had been used almost exclusively by the military during the Cold War, the U.S. Government controlled the export of encryption products and included them on the United States Munitions List. After the U.S. introduced the Data Encryption Standard (DES) in 1975, private enterprise began to take encryption more seriously. In this era, however, only large corporations with wealthy customers could jump through the hoops needed to obtain government export licenses for encryption products. Tightly held, heavily regulated, and politically sensitive, encryption wasn’t something just anyone could develop or sell. The advent of the PC began to change that, and e-commerce sent the number of online financial transactions soaring. Netscape introduced SSL technology to secure credit card transactions with PKI, but was not allowed to export its secure 128-bit version outside the U.S. Cases like Bernstein v. United States and Junger v. Daley challenged the government’s stranglehold on encryption, and the fact that the restrictions were hampering the U.S. tech sector added pressure on the government. Finally, in 1996, President Clinton signed an executive order that relaxed the regulations, took commercial encryption off the Munitions List, and ushered in a new era of crypto for all.

The CryptOlympics

By the late 1990s it was clear that DES, with its 56-bit key, had been rendered obsolete by increasing computing power. Attempts to extend its life led to adaptations like Triple DES, which is secure but slow, and alternative “drop-in” variants with longer keys, including a 256-bit key, that kept the 64-bit DES block size. Sensing the need for a new standard that would be faster and more efficient, NIST launched the Advanced Encryption Standard (AES) contest in 1997. Out of 15 submissions from 50 top cryptologists around the world, NIST selected Rijndael, a cipher created by two Belgians, Vincent Rijmen and Joan Daemen, which became AES in 2001. AES is still in use today, with a 128-bit block size and support for key sizes of 128, 192, and 256 bits. Other past contests include NIST’s SHA-3 competition for a new hash standard, launched in 2007, and the CAESAR competition for authenticated encryption schemes, which protect both the confidentiality and the authenticity of messages, launched in 2012; CAESAR winners were announced in March 2019. Two NIST contests are currently running: Lightweight Cryptography (LWC) Standardization and Post-Quantum Cryptography Standardization. The LWC standard should provide a secure option for what NIST calls “constrained devices,” essentially “dumber” members of the IoT that lack the resources to run existing standards. Read on to learn about the Post-Quantum Cryptography Standardization contest.
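
A quick back-of-the-envelope calculation shows why key length matters: every extra bit doubles the number of keys a brute-force attack must try. The search rate below is a hypothetical round number chosen for illustration, not a measured figure for any real machine, and `worst_case_search` is an illustrative helper name.

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY
KEYS_PER_SECOND = 1e11  # hypothetical search rate, for illustration only

def worst_case_search(key_bits: int, rate: float = KEYS_PER_SECOND) -> float:
    """Seconds needed to try every key of the given length at `rate` keys per second."""
    return 2 ** key_bits / rate

for bits in (56, 128):
    seconds = worst_case_search(bits)
    print(f"{bits}-bit key: {seconds / SECONDS_PER_DAY:.3g} days "
          f"({seconds / SECONDS_PER_YEAR:.3g} years)")
# 56-bit key: 8.34 days (0.0228 years)
# 128-bit key: 3.94e+22 days (1.08e+20 years)
```

At the same assumed rate, a search that finishes in about a week for DES would take on the order of 10^20 years for a 128-bit AES key, which is why longer keys, rather than faster hardware alone, decided the contest's requirements.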

A Quantum Quandary

It’s inevitable: what we make, we break. DES was broken in 1997, when rapidly increasing computing power enabled effective brute-force attacks against a standard that had been securely in place since the 1970s. Our current standard, AES, replaced DES in 2001 after DES was shown to be fundamentally insecure. With current computing power, AES is considered practically unbreakable. Quantum computers, however, could change the picture by around 2030, according to NIST, with public key cryptography most at risk: a sufficiently powerful quantum computer could dismantle today’s public key infrastructure, while symmetric ciphers like AES may need larger keys. Right now, quantum computing is largely theoretical, and existing prototypes don’t have the power to make a dent in even simple encryption, but that will change. Luckily, NIST’s contest to standardize post-quantum cryptography is already in round 2. To match the decades-long longevity of AES, this new standard will need to far outpace even theoretical computing power and capabilities. As history shows us, encryption methods (even centuries-old mainstays) may be broken, but humans always devise new ones. We’ll still need cryptography to keep sensitive information confidential, guarantee the integrity of our communications and online downloads, authenticate ourselves and our devices, and maintain network security, so it will be interesting to see what comes out of the contest.

Although the history of cryptography is littered with broken codes and clever workarounds, that doesn’t mean you should fear for the integrity of your network or your sensitive data. Discover identity and access management solutions from a trusted partner with the expertise necessary to keep your organization secure.

Jeff Carpenter is Director of Cloud Authentication at HID Global. In his 15+ years in cybersecurity, Jeff has held positions with several top tier cybersecurity and technology companies including Crossmatch and RSA, a Dell Technologies company. He holds both Certified Information Systems Security Professional (CISSP) and Certified Cloud Security Professional (CCSP) designations.
